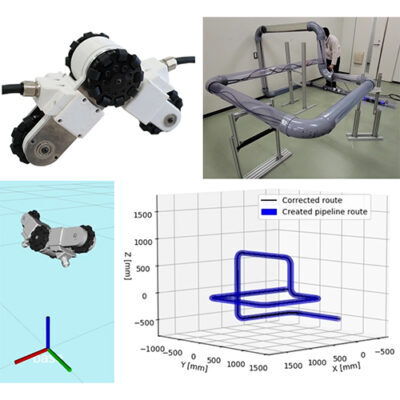

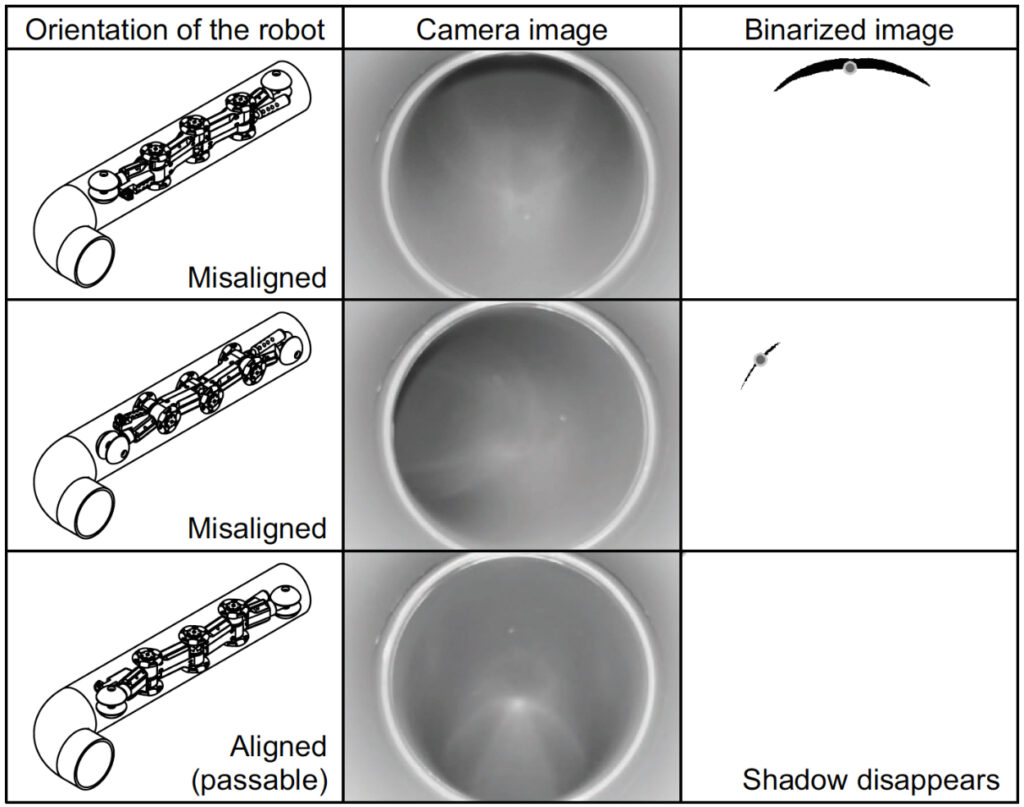

In our previously developed robot “AIRo”, the operator had to steer using only a two-dimensional camera image, adjusting both the rolling direction and the robot orientation with a hand-held manual controller. This requires skill and takes time to learn. In this study, we propose a method that determines the direction in which the robot should roll, and applies it to the rotation of the spherical wheel, using a crescent-shaped shadow that appears in the camera image when the robot approaches a curved pipe.

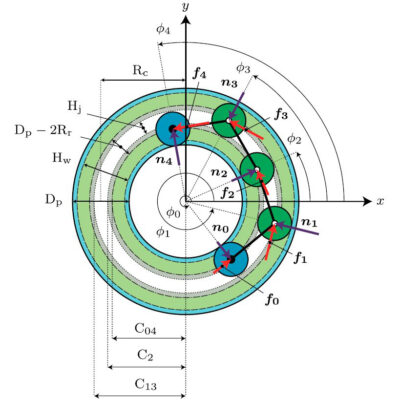

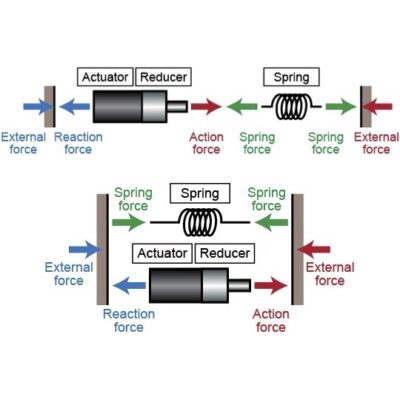

The principle is as follows. The shadow appears when, relative to the camera, the illumination is on the side nearer the path of the curved pipe, and disappears when the illumination is on the side farther from that path. When the robot is rotated around the pipe axis from the orientation in which the shadow appears, the crescent-shaped shadow moves around a circle in the camera image while gradually shrinking, and eventually disappears. Suppose the robot is commanded to rotate the spherical wheel in the direction of the shadow while the shadow is visible, and to rotate the driving wheel to move forward once the shadow has disappeared. The operator then only needs to send a simple command to move forward, move backward, or stop, and does not need to operate the spherical wheel to match the direction of the curved pipe.
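
The rule above can be sketched as a small decision function. This is a minimal illustration, not the robot's actual controller: the names `OperatorCommand`, `WheelCommand`, and `decide`, the encoding of the shadow side as `+1`/`-1`/`None`, and the behavior on a backward command (drive backward without orientation adjustment) are all assumptions made for the example.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class OperatorCommand(Enum):
    """The three simple commands the operator sends."""
    FORWARD = "forward"
    BACKWARD = "backward"
    STOP = "stop"


@dataclass
class WheelCommand:
    drive: float  # driving wheel speed (positive = forward)
    roll: float   # spherical wheel rotation rate (sign = rolling direction)


def decide(operator: OperatorCommand,
           shadow_side: Optional[int],
           drive_speed: float = 1.0,
           roll_speed: float = 1.0) -> WheelCommand:
    """Map the operator command and the shadow state to wheel commands.

    shadow_side is a hypothetical encoding: +1 or -1 for the side of the
    image on which the shadow is seen, None when no shadow is visible.
    """
    if operator is OperatorCommand.STOP:
        return WheelCommand(drive=0.0, roll=0.0)
    if operator is OperatorCommand.BACKWARD:
        # Assumption: no orientation adjustment while backing up.
        return WheelCommand(drive=-drive_speed, roll=0.0)
    if shadow_side is not None:
        # Shadow visible: roll toward the shadow instead of advancing.
        return WheelCommand(drive=0.0, roll=roll_speed * shadow_side)
    # Shadow gone: the orientation matches the bend, so move forward.
    return WheelCommand(drive=drive_speed, roll=0.0)
```

With this structure the operator input stays one-dimensional (forward, backward, stop), and the choice between rolling and driving is made entirely from the shadow state.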

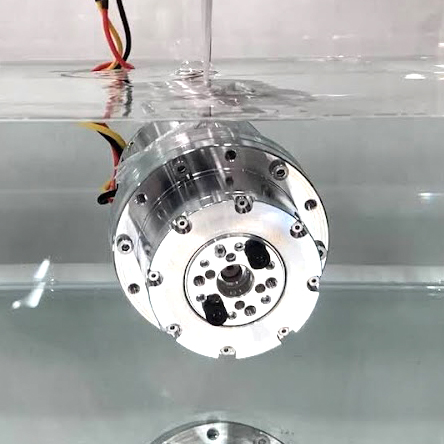

Here, we focus on two points: the “orientation dependence of passing through a curved pipe,” in which the robot can pass through a curved pipe only at a certain orientation relative to the direction of the pipe's path, and the “orientation dependence of shadow appearance,” in which the crescent-shaped shadow disappears only at a certain orientation relative to the direction of the curved pipe’s path. Making these two orientations coincide enables automatic orientation adjustment of the robot. The result is a compact automatic orientation adjustment system with a very simple configuration, consisting only of a staggered arrangement of the camera and lighting. It also gets the most out of the camera and lighting by using them not only for inspection but also for automatic orientation adjustment (killing two birds with one stone). The space inside the pipe is too restricted to mount multiple sensors on the robot. A camera and lighting are needed anyway to inspect the dark, narrow interior of the pipe, so automatic orientation adjustment is achieved without adding any sensors or other components.

When the robot reaches a curved pipe, the automatic orientation adjustment function begins to operate as described above. However, a dark region also appears in the center of the camera image in a straight pipe, so the robot may start to rotate in a straight pipe if the same processing is applied there. We therefore devised a dedicated binarization process that extracts only the shadow seen in a curved pipe from the camera image. In a straight pipe, the image darkens gradually from the outside toward the center. In a curved pipe, the wall ahead reflects the illumination light, so the camera image contains many bright areas and only the shadow appears locally dark.
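
To make the idea of extracting a locally dark shadow concrete, here is a minimal sketch using a plain fixed threshold, which is a stand-in and not the binarization process devised in this study. The function name `shadow_direction_deg` and the parameters `dark_threshold` and `min_area_ratio` are hypothetical, and the input is assumed to be an 8-bit grayscale image held in a NumPy array.

```python
import math
from typing import Optional

import numpy as np


def shadow_direction_deg(gray: np.ndarray,
                         dark_threshold: int = 60,
                         min_area_ratio: float = 0.01) -> Optional[float]:
    """Return the direction of the dark region as seen from the image center.

    The angle is in degrees, 0 pointing right and 90 pointing up in the
    image. None is returned when too few pixels are dark to count as a
    shadow. Both thresholds are illustrative values, not measured ones.
    """
    gray = np.asarray(gray)
    mask = gray < dark_threshold           # binarize: True where dark
    if not mask.any() or mask.mean() < min_area_ratio:
        return None                        # no shadow worth acting on
    ys, xs = np.nonzero(mask)              # coordinates of dark pixels
    h, w = gray.shape
    dx = xs.mean() - (w - 1) / 2.0         # centroid offset to the right
    dy = (h - 1) / 2.0 - ys.mean()         # centroid offset upward (rows grow downward)
    return math.degrees(math.atan2(dy, dx))
```

A plain threshold like this would also respond to the dark center of a straight pipe, which is exactly the problem raised in the paragraph above.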

In this study, based on this difference in brightness distribution between straight and curved pipes, we use the magnitude of the variance of the luminance histogram (luminance value versus number of pixels) to automatically discriminate between straight and curved pipes in advance. By measuring the trend of these variance values beforehand, the system can also be used for pipes with different reflectance, such as steel and plastic pipes. Experiments were conducted in a 7.5-m-long opaque simulated pipeline that includes seven curved pipes and a vertical section, and confirmed that the robot can complete the run without human operation.
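
The variance-based discrimination can be sketched as follows. This is an illustration under assumptions, not the published implementation: the "variance of the histogram" is interpreted here as the variance of the pixel luminance values, the names `luminance_variance`, `PipeClassifier`, and `calibrate` are hypothetical, and the threshold is simply placed midway between the mean variances of sample images recorded in advance for each pipe type. The sketch does not assume which pipe type has the larger variance; that is read from the calibration samples.

```python
from dataclasses import dataclass
from typing import Sequence

import numpy as np


def luminance_variance(gray: np.ndarray) -> float:
    """Variance of the pixel luminance values of a grayscale image."""
    return float(np.var(np.asarray(gray, dtype=np.float64)))


@dataclass(frozen=True)
class PipeClassifier:
    threshold: float         # decision boundary on the variance
    curved_is_higher: bool   # True if curved pipes showed the larger variance

    def is_curved(self, gray: np.ndarray) -> bool:
        above = luminance_variance(gray) > self.threshold
        return above == self.curved_is_higher


def calibrate(straight_samples: Sequence[np.ndarray],
              curved_samples: Sequence[np.ndarray]) -> PipeClassifier:
    """Derive a threshold from images recorded in advance for one pipe material."""
    straight_mean = float(np.mean([luminance_variance(g) for g in straight_samples]))
    curved_mean = float(np.mean([luminance_variance(g) for g in curved_samples]))
    return PipeClassifier(threshold=(straight_mean + curved_mean) / 2.0,
                          curved_is_higher=curved_mean > straight_mean)
```

Running `calibrate` once per pipe material, for example once for steel and once for plastic, corresponds to measuring the trend of the variance values in advance.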

This result was achieved through joint research with BioInMech Lab, Ritsumeikan University. The work was funded by the Japan Science and Technology Agency (JST) as "Autonomous Control and Mapping of a Piping Inspection Robot Based on Visual Information," a project to select and foster technology seeds (in the field of robotics) under the START Program for the Creation of New Industry from Universities.

Publications

- Atsushi Kakogawa, Yuki Komurasaki, and Shugen Ma, Shadow-based Operation Assistant for a Pipeline-inspection Robot using a Variance Value of the Image Histogram, Journal of Robotics and Mechatronics, Vol. 31, Iss. 6, pp. 772-780, 2019

- Atsushi Kakogawa, Yuki Komurasaki, and Shugen Ma, Anisotropic Shadow-based Operation Assistant for a Pipeline-inspection Robot using a Single Illuminator and Camera, Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2017), pp. 1305-1310, 2017

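- Yuki Komurasaki, Atsushi Kakogawa, and Shugen Ma, Automatic Traveling System in Elbow Pipes for a Pipe-inspection Robot Based on Shadow Image Information, JSME Conference on Robotics and Mechatronics 2016, 2P2-14a5, 2016 (in Japanese)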

Previous studies that served as references for this study

- J. Lee, S. Roh, D. W. Kim, H. Moon, and H. R. Choi, In-pipe Robot Navigation Based on the Landmark Recognition System Using Shadow Images, in Proc. of the IEEE Int. Conf. Robotics and Automation, pp. 1857-1862, 2009

- D. H. Lee, H. Moon, and H. R. Choi, Landmark Detection Methods for In-pipe Robot Traveling in Urban Gas Pipelines, Robotica, Vol. 34, Iss. 3, pp. 601-618, 2016